Genetic

algorithms : tutorial

Keywords : tutorial, genetic algorithms, genetic algorithms, genetic classifiers, Charles Darwin, the origin of species, theory of evolution, William Bateson, genotype, phenotype, mutation, crossing over, reproduction, fitness

Written by Gerard YAHIAOUI and Pierre DA SILVA DIAS, main founders of the applied maths research company NEXYAD, © NEXYAD, all rights reserved : for any question, please CONTACT

Reproduction of partial or complete content of this page is authorized ONLY if "source : NEXYAD http://www.nexyad.com" is clearly mentionned.

This tutorial was written for students or engineers that wish to understand main hypothesis and ideas of Genetic Algorithms..

History and vocabulary

Keywords : tutorial, genetic algorithms, genetic algorithms, genetic classifiers, Charles Darwin, the origin of species, theory of evolution, William Bateson, genotype, phenotype, mutation, crossing over, reproduction, fitness

Written by Gerard YAHIAOUI and Pierre DA SILVA DIAS, main founders of the applied maths research company NEXYAD, © NEXYAD, all rights reserved : for any question, please CONTACT

Reproduction of partial or complete content of this page is authorized ONLY if "source : NEXYAD http://www.nexyad.com" is clearly mentionned.

This tutorial was written for students or engineers that wish to understand main hypothesis and ideas of Genetic Algorithms..

History and vocabulary

Human

beings use to

build complex structured things such as houses, towers, cars, TVs,

telephones, computers, or peanuts automatic distributors.

Structure of these things come from the work of "engineers" (in a general sense).

In the natural world, some people think that it is the same. A chief engineer would have created and built every structured things.

Physicians use the second principle of thermodynamics which implies that closed systems spontaneously evolve from structured state to desorder. And this principle would let think that structured things cannot appear spontaneously.This principle can be experimented in a laboratory. However, observation of "life" and "Universe" evolutions on a very large scale of space and time seem to show another way.

Indeed, observation of fossiles, for instance, shows that organisms seem to have evolved among time : life would have begun on Earth with very "simple" species (mono cellular organisms), and became more and more complex and structured with time (multi cellular organisms, plants, animals, human beings).

Astro-physicians seem to describe the same kind of observations about the Universe itself : according to the theories in force, at the "begining", there was just unstructured energy localized in a small volume (it means that space already existed !?) that evolved spontaneously into galaxies, solar systems, planets, ... (after a "Big Bang").

Maybe the concept of "closed" systems doesn't apply to "life" or "Universe", or maybe other principles may also apply and would prevail in these cases (critic mass effect, self organisation principle, ...). Or perhaps our perception of what is structured or unstructured is not good.

Concerning the evolution of life, "The theory of evolution" was proposed by Charles Darwin (The Origin of Species) in 1859. Darwin proposed a model where evolution would be the result of randomly drawn modifications that are filtered by the environment : individuals that are more viable than the others have more time to reproduce themselves, whereas individuals that are less viable mostly die before reproduction. After a while, only viable individuals exist. Such a model would lead to more and more adapted species. Ifever structure level is an item of adaptation, then this model would lead "spontaneously" (without engineer) to more and more structured solutions.

Although this model doesn't fit into every observation, it seems to "explain" (see MODELS in order to know what "'explain" means for a model) a significant number of species evolution facts.

Biologists wished then to study reproduction and especially inheritance and variation. The word "genetics" was first applied to describe the study of inheritance and the science of variation by English scientist William Bateson in a letter to Adam Sedgewick, dated April 18, 1905.

From this start, new models of inheritance and variation was developed, according to which the macroscopic characters of an individual are determined by a finite number of microscopic elements called the "genes". The set of macroscopic characters (size, colour of the eyes, number of legs, ...) is called the "phenotype". The set of microscopic characters (the genes) is called the genotype.

The searcher John H Holland, on the basis of both biological evolution theory and genetic research, invented the Genetic Algorithms in the 1960s, in order to build computer programs that would adapt themselves to perform a given function.

What is a problem ? What is a solution ?

First, let us assume that a solution to a problem can be described by a finite number of parameters. Every parameter is coded into a number (for instance, a binary number : 0 or 1, or a real number, ...).

Every list of numbers (of the right type and dimension) is a possible solution.

The problem can be considered as being the criterium of evaluation of a possible solution.

Example : for the second degree equation 2.x2 + x - 1 = 0 is a problem (finding x that leads to a proper evaluation of 2.x2 + x - 1).

NB : let us notice that this way of describing a problem is different from the "usual" presentation.

One way for solving this problem could be to "test every solution" : of course, for real numbers, the set is infinite and not countable, but the idea remains the same : some strategies may be used in order to find iteratively a solution (example : dichotomy) :

The above problem has 2 solutions :

x = -1

or

x = 1/2

Of course, for such a simple problem, this iterative solution research loop is much less efficient than using a formal mathematical method that directly solves the problem. But for real world applications, direct formal methods may be :

- not existing

- too long to compute

- depending on values (example : "fifty ways to invert a matrix")

- hard to apply in the general case of "noise" (example : a simple Kramer system that should have a determinant equal to zero becomes a system with a determinant about equal to zero => it may be positive or negative : and lead to a solution whereas this solution shouldn't exist ...)

Iterative methods are made to find an approximation of a solution in a given time : for the above problem, an acceptable solution leads to an evaluated value "close to" 0. Then -1,0002 may be an acceptable solution.

Beware, such an iterative method may give a "solution" even if there is no solution : the minimum value for the error (see figure above) is the "best" possible solution.

For instance, the equation x2 + 20 = 0 has no solution in the set of real number (whereas it accepts solutions in the set of complex numbers) :

A loop of iterative approximation of the solution should converge to x=0, with a residual value (an error) y=20 (that will be found as the "best" possible approximation of 0).

So one can see that the interpretation of solutions given by iterative systems that do not prove the existance of the solution (and even that often do not prove their ability to convergence ...) is not that simple.

Genetic algorithms

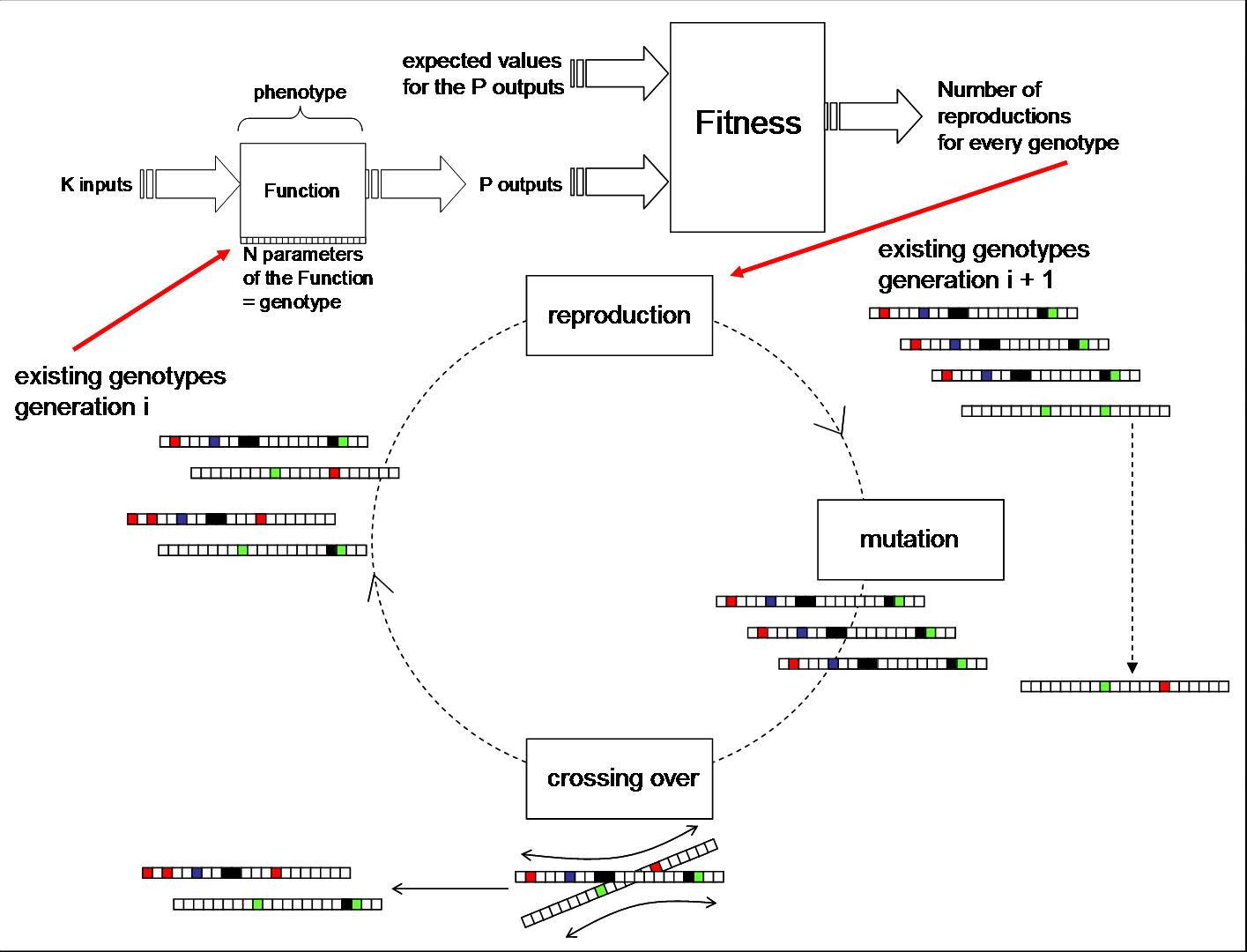

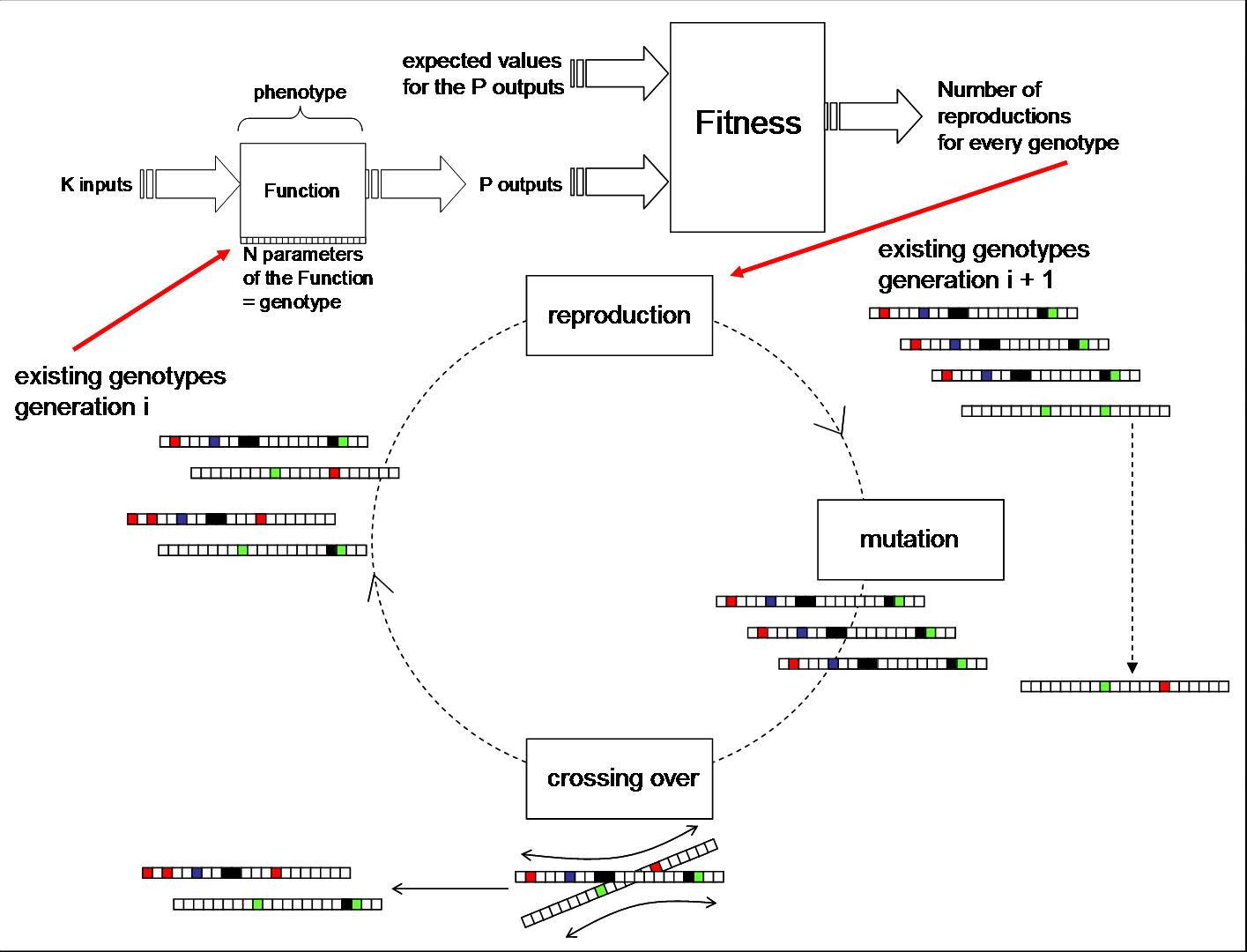

Genetic algorithms are iterative loops of optimisation : a fitness function measures the adaptation of a solution to the problem needs. Every solution is represented by a set of numbers that we will call a set of "parameters". These parameters are called "genes".

There exist a "function" that uses the parameters. The "function" is called the phenotype, and the problem consist in finding phenotypes adapted to the problem.

Phenotypes are filtered by the fitness function that reproduces the most adapted phenotypes. The more the adpatation the more the reproduction. Phenotypes are modified through 2 ways :

- mutation : it consists in moving one parameter through a random modification,

- crossing over :

The complete cycle is then :

Special characteristics of GEs

The main characteristics of genetic algorithms are :

- several "solutions" (several genotypes, and then several phenotypes) are existing together,

- several solutions are mixed together using the crossing over procedure : it leads to new genotypes made with parts of only viable solutions (the "research" is focused on viable solutions). It is a way to make fast f of existing solutions with a probability of good fitness that is generally much higher than it would be for a completely random modification (with the same range). NB : this "high probability" of good fitness through crossing over is only true if there exist a "topology" in the set of genes : it means that very close genotypes must lead to very close phenotypes. If (when) this property is not true, then crossing over can be considered as a fast random variation (and it often leads to a solution that is eliminated by the fitness function.

- the reproduction may lead to a big number of identical genotypes (because their phenotype is well adapted to the problem). Two identical genotypes will stay unchanged through any crossing over, and then will only change through mutations (slowly),

- it is possible to use "wild cards" in genotypes : a wild card is a gene that has no value : it represents implicitely all the possible values for this gene. For the fitness computation, the "wild carded" genotype is then replaced with all the solutions that it represents, and the reproduction is made with the max of the fitness function. The "wild carded" genotype is a "familly" of genotypes.

NB : There is no proof of genetic algorithms convergence.

In fact :

Genetic algorithms were not made to converge at all !. They are an infinite loop that screens a very big combination of possibilities, and localy optimize a SET of already quite viable solutions in order to build a set of better solutions. They never stop to evolve.

Genetic algorithms scientific papers often speak about "robustness". Robustness doesn't have the same meaning in several applied maths fields. In the case of GEs, it is often the term to say "ability to work for a slightly different problem" : indeed, in real life, the biotop is always changing (usually very slowly, sometimes fast), and the problem that has to be solved by natural animals is to stay alive. But in practice, because the world is always changing, the problem is changing too.

For this reason, GEs users often apply heuristics that avoid to converge (yes you've read correctly !), and convergence to a very good solution is considered as having a very adapted species. The most a species is adapted to a given problem, the less the probability that it would be adapted to another one ... Observation of Nature seems to show the example of "super species" that were very well adapted to their environment. All individuals tend to be very similar, and it becomes hard to move from this solution using crossing over for instance. Such a species is very fragile in case of modifications of the environment. This super species birth is considered by scientists as being the reason of many species death.

So, in fact, the aim of genetic algorithms is "only" to find a set of acceptable solutions, that is a set of individuals that are significantly different and enough viable. The aim is not to find neither THE solution nor A solution.

Using genetic algorithms in order to solve a known problem with a known unique solution is not a real good benchmark : genetic algorithms may be better or worse, depending on the chararcteristics of the formal known solution.

But genetic algorithms are interesting to apply in the case of not solvable problems : there is no unique solution, there is no solution in a formal way (error = zero doesn't mean anything on many real world projects where noise is very important), and all what is expected is several "viable" solutions. And they are very interesting in the case of a problem that may change among time.

Example :

- automatic programming : build automatically a C++ program that should produce a "known" result on a given set of inputs,

- genetic classifiers : expert systems used in "artificial life" researches,

- complex local optimisation problems,

Genetic classifiers

Genetic classifiers are often used in "artificial life" projects : the aim of these projects is to build an artificial environment and artificial animals, with an initial behaviour that is randomly initialized, and see how species with quite understandable behaviours may survive/appear.

A genetic classifier is a set of input/ output rules that are modified by a genetic algorithm :

It is just an application of genetic algorithms where phenotypes are rule-based systems and where genotypes are rules (rules are the parameters of the rule-based system).

Every individual is a genetic classifier that lets its rules evolve.

Individuals that do not have a behaviour that lets them find food and that lets escape from predators disappear fast.

NEXYAD team studied genetic classifiers on artificial life projects (with no application at all ! yes we do this sometimes !) : cats, mice, and cheese world.

It is very interesting to notice that "silly mice" could always find cheese, even with a random behaviour. Cats with a random behaviour always died (they never caught a mouse) and we had to create millions of initial random cats :-)

So rules of cats begin to evolve first.

When cats begin to run after mice (that always move randomly) and catch them, then mice behaviour rules begin to evolve : the 2 species doesn't evolve simulaneously.

NB : creating "cats" and "mice" we didn't initialize the problem completely randomly (we decided that cats should eat mice and that mice should eat cheese ...) : see our paper (in french) : "le jeu du chat et de la souris" : scientificbackground.html

Structure of these things come from the work of "engineers" (in a general sense).

In the natural world, some people think that it is the same. A chief engineer would have created and built every structured things.

Physicians use the second principle of thermodynamics which implies that closed systems spontaneously evolve from structured state to desorder. And this principle would let think that structured things cannot appear spontaneously.This principle can be experimented in a laboratory. However, observation of "life" and "Universe" evolutions on a very large scale of space and time seem to show another way.

Indeed, observation of fossiles, for instance, shows that organisms seem to have evolved among time : life would have begun on Earth with very "simple" species (mono cellular organisms), and became more and more complex and structured with time (multi cellular organisms, plants, animals, human beings).

Astro-physicians seem to describe the same kind of observations about the Universe itself : according to the theories in force, at the "begining", there was just unstructured energy localized in a small volume (it means that space already existed !?) that evolved spontaneously into galaxies, solar systems, planets, ... (after a "Big Bang").

Maybe the concept of "closed" systems doesn't apply to "life" or "Universe", or maybe other principles may also apply and would prevail in these cases (critic mass effect, self organisation principle, ...). Or perhaps our perception of what is structured or unstructured is not good.

Concerning the evolution of life, "The theory of evolution" was proposed by Charles Darwin (The Origin of Species) in 1859. Darwin proposed a model where evolution would be the result of randomly drawn modifications that are filtered by the environment : individuals that are more viable than the others have more time to reproduce themselves, whereas individuals that are less viable mostly die before reproduction. After a while, only viable individuals exist. Such a model would lead to more and more adapted species. Ifever structure level is an item of adaptation, then this model would lead "spontaneously" (without engineer) to more and more structured solutions.

Although this model doesn't fit into every observation, it seems to "explain" (see MODELS in order to know what "'explain" means for a model) a significant number of species evolution facts.

Biologists wished then to study reproduction and especially inheritance and variation. The word "genetics" was first applied to describe the study of inheritance and the science of variation by English scientist William Bateson in a letter to Adam Sedgewick, dated April 18, 1905.

From this start, new models of inheritance and variation was developed, according to which the macroscopic characters of an individual are determined by a finite number of microscopic elements called the "genes". The set of macroscopic characters (size, colour of the eyes, number of legs, ...) is called the "phenotype". The set of microscopic characters (the genes) is called the genotype.

The searcher John H Holland, on the basis of both biological evolution theory and genetic research, invented the Genetic Algorithms in the 1960s, in order to build computer programs that would adapt themselves to perform a given function.

What is a problem ? What is a solution ?

First, let us assume that a solution to a problem can be described by a finite number of parameters. Every parameter is coded into a number (for instance, a binary number : 0 or 1, or a real number, ...).

Every list of numbers (of the right type and dimension) is a possible solution.

The problem can be considered as being the criterium of evaluation of a possible solution.

Example : for the second degree equation 2.x2 + x - 1 = 0 is a problem (finding x that leads to a proper evaluation of 2.x2 + x - 1).

NB : let us notice that this way of describing a problem is different from the "usual" presentation.

One way for solving this problem could be to "test every solution" : of course, for real numbers, the set is infinite and not countable, but the idea remains the same : some strategies may be used in order to find iteratively a solution (example : dichotomy) :

The above problem has 2 solutions :

x = -1

or

x = 1/2

Of course, for such a simple problem, this iterative solution research loop is much less efficient than using a formal mathematical method that directly solves the problem. But for real world applications, direct formal methods may be :

- not existing

- too long to compute

- depending on values (example : "fifty ways to invert a matrix")

- hard to apply in the general case of "noise" (example : a simple Kramer system that should have a determinant equal to zero becomes a system with a determinant about equal to zero => it may be positive or negative : and lead to a solution whereas this solution shouldn't exist ...)

Iterative methods are made to find an approximation of a solution in a given time : for the above problem, an acceptable solution leads to an evaluated value "close to" 0. Then -1,0002 may be an acceptable solution.

Beware, such an iterative method may give a "solution" even if there is no solution : the minimum value for the error (see figure above) is the "best" possible solution.

For instance, the equation x2 + 20 = 0 has no solution in the set of real number (whereas it accepts solutions in the set of complex numbers) :

A loop of iterative approximation of the solution should converge to x=0, with a residual value (an error) y=20 (that will be found as the "best" possible approximation of 0).

So one can see that the interpretation of solutions given by iterative systems that do not prove the existance of the solution (and even that often do not prove their ability to convergence ...) is not that simple.

Genetic algorithms

Genetic algorithms are iterative loops of optimisation : a fitness function measures the adaptation of a solution to the problem needs. Every solution is represented by a set of numbers that we will call a set of "parameters". These parameters are called "genes".

There exist a "function" that uses the parameters. The "function" is called the phenotype, and the problem consist in finding phenotypes adapted to the problem.

Phenotypes are filtered by the fitness function that reproduces the most adapted phenotypes. The more the adpatation the more the reproduction. Phenotypes are modified through 2 ways :

- mutation : it consists in moving one parameter through a random modification,

- crossing over :

The complete cycle is then :

Special characteristics of GEs

The main characteristics of genetic algorithms are :

- several "solutions" (several genotypes, and then several phenotypes) are existing together,

- several solutions are mixed together using the crossing over procedure : it leads to new genotypes made with parts of only viable solutions (the "research" is focused on viable solutions). It is a way to make fast f of existing solutions with a probability of good fitness that is generally much higher than it would be for a completely random modification (with the same range). NB : this "high probability" of good fitness through crossing over is only true if there exist a "topology" in the set of genes : it means that very close genotypes must lead to very close phenotypes. If (when) this property is not true, then crossing over can be considered as a fast random variation (and it often leads to a solution that is eliminated by the fitness function.

- the reproduction may lead to a big number of identical genotypes (because their phenotype is well adapted to the problem). Two identical genotypes will stay unchanged through any crossing over, and then will only change through mutations (slowly),

- it is possible to use "wild cards" in genotypes : a wild card is a gene that has no value : it represents implicitely all the possible values for this gene. For the fitness computation, the "wild carded" genotype is then replaced with all the solutions that it represents, and the reproduction is made with the max of the fitness function. The "wild carded" genotype is a "familly" of genotypes.

NB : There is no proof of genetic algorithms convergence.

In fact :

Genetic algorithms were not made to converge at all !. They are an infinite loop that screens a very big combination of possibilities, and localy optimize a SET of already quite viable solutions in order to build a set of better solutions. They never stop to evolve.

Genetic algorithms scientific papers often speak about "robustness". Robustness doesn't have the same meaning in several applied maths fields. In the case of GEs, it is often the term to say "ability to work for a slightly different problem" : indeed, in real life, the biotop is always changing (usually very slowly, sometimes fast), and the problem that has to be solved by natural animals is to stay alive. But in practice, because the world is always changing, the problem is changing too.

For this reason, GEs users often apply heuristics that avoid to converge (yes you've read correctly !), and convergence to a very good solution is considered as having a very adapted species. The most a species is adapted to a given problem, the less the probability that it would be adapted to another one ... Observation of Nature seems to show the example of "super species" that were very well adapted to their environment. All individuals tend to be very similar, and it becomes hard to move from this solution using crossing over for instance. Such a species is very fragile in case of modifications of the environment. This super species birth is considered by scientists as being the reason of many species death.

So, in fact, the aim of genetic algorithms is "only" to find a set of acceptable solutions, that is a set of individuals that are significantly different and enough viable. The aim is not to find neither THE solution nor A solution.

Using genetic algorithms in order to solve a known problem with a known unique solution is not a real good benchmark : genetic algorithms may be better or worse, depending on the chararcteristics of the formal known solution.

But genetic algorithms are interesting to apply in the case of not solvable problems : there is no unique solution, there is no solution in a formal way (error = zero doesn't mean anything on many real world projects where noise is very important), and all what is expected is several "viable" solutions. And they are very interesting in the case of a problem that may change among time.

Example :

- automatic programming : build automatically a C++ program that should produce a "known" result on a given set of inputs,

- genetic classifiers : expert systems used in "artificial life" researches,

- complex local optimisation problems,

Genetic classifiers

Genetic classifiers are often used in "artificial life" projects : the aim of these projects is to build an artificial environment and artificial animals, with an initial behaviour that is randomly initialized, and see how species with quite understandable behaviours may survive/appear.

A genetic classifier is a set of input/ output rules that are modified by a genetic algorithm :

It is just an application of genetic algorithms where phenotypes are rule-based systems and where genotypes are rules (rules are the parameters of the rule-based system).

Every individual is a genetic classifier that lets its rules evolve.

Individuals that do not have a behaviour that lets them find food and that lets escape from predators disappear fast.

NEXYAD team studied genetic classifiers on artificial life projects (with no application at all ! yes we do this sometimes !) : cats, mice, and cheese world.

It is very interesting to notice that "silly mice" could always find cheese, even with a random behaviour. Cats with a random behaviour always died (they never caught a mouse) and we had to create millions of initial random cats :-)

So rules of cats begin to evolve first.

When cats begin to run after mice (that always move randomly) and catch them, then mice behaviour rules begin to evolve : the 2 species doesn't evolve simulaneously.

NB : creating "cats" and "mice" we didn't initialize the problem completely randomly (we decided that cats should eat mice and that mice should eat cheese ...) : see our paper (in french) : "le jeu du chat et de la souris" : scientificbackground.html

For

more questions or applications,

please feel free to contact

us